(Disclaimer – there may be some better reason for this that I just haven’t come across yet. I am still learning this stuff, and may end up being wrong. Even so … )

The way particle distinguishability is usually explained bugs me to no end. I cannot recommend the following paper by Jaynes [1] enough; as far as I can tell, it is one of the only things I’ve read that gets this *right*.

[1] Jaynes, Edwin T. “The Gibbs Paradox.” Maximum Entropy and Bayesian Methods, 1992, 1–22.

Eliezer Yudkowsky has a series explaining the basics of what must be going on with quantum physics from a wavefunction-realist perspective (which is my own perspective as well), but I’ve been seriously wondering lately whether quantum indistinguishability and its supposed proofs suffer from the same problems/sloppiness as classical indistinguishability.

Here is his post: http://lesswrong.com/lw/ph/can_you_prove_two_particles_are_identical/

Here is my comment/question/proposition:

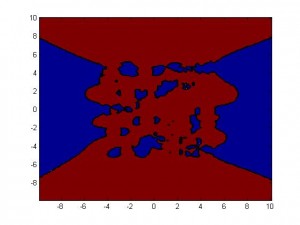

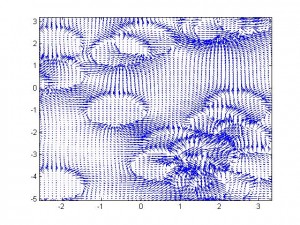

I have a counter-hypothesis: suppose the universe did distinguish between photons, but we had no tests that could tell them apart. Physically, this would mean that our measuring devices, in their quantum-to-classical transitions (yes, I know this is a matter of perception in MWI), are what add the amplitudes before the squared modulus is taken. Our measuring devices can’t distinguish the photons, which is why we can get away with representing the hidden “true wavefunction” (or whatever object carries similar information) with a symmetric wavefunction. If we invented a measurement device capable of distinguishing photons, photon A and photon B striking it would dump amplitude into distinct states of the device rather than into the same state, and we could no longer make predictions using a symmetric wavefunction for the photon field.
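The amplitude bookkeeping in that hypothesis can be made concrete with the standard two-photon beam-splitter (Hong-Ou-Mandel) calculation. This is a minimal sketch, not a model of any real device: `label_a` and `label_b` are hypothetical stand-ins for the states that the “photon A” and “photon B” histories leave behind, and the factor *i* on reflection is one common phase convention. When both coincidence histories end in the same state, their amplitudes add before squaring and cancel; when they end in orthogonal states, the probabilities add instead.

```python
import math

# 50:50 beam splitter amplitudes (assumed convention: factor i on reflection).
T = 1 / math.sqrt(2)
R = 1j / math.sqrt(2)


def inner(u, v):
    """Inner product <u|v> of two state vectors given as lists of numbers."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


def coincidence_probability(label_a, label_b):
    """Probability that the two photons leave by different output ports.

    label_a, label_b: normalised vectors standing in (hypothetically) for
    whatever tags photon A versus photon B. Two histories give a coincidence:
    both transmitted (amplitude T*T) and both reflected (amplitude R*R).
    Their final states overlap by |<label_a|label_b>|**2, which sets how much
    of the interference (cross) term survives when the total is squared.
    """
    both_transmitted = T * T
    both_reflected = R * R
    overlap = abs(inner(label_a, label_b)) ** 2
    cross = 2 * (both_transmitted.conjugate() * both_reflected).real * overlap
    return abs(both_transmitted) ** 2 + abs(both_reflected) ** 2 + cross


if __name__ == "__main__":
    same = [1, 0]
    other = [0, 1]
    print(coincidence_probability(same, same))   # close to 0.0: amplitudes cancel
    print(coincidence_probability(same, other))  # close to 0.5: probabilities add
```

Partial overlap interpolates between the two cases as (1 − |⟨a|b⟩|²)/2, so how “identical” the photons behave is set entirely by how much the two histories’ end states overlap.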

I think quantum physicists here are making the same mistake that led to the Gibbs paradox in classical physics. Of course, my classical thermodynamics textbook tried to sweep the Gibbs paradox under the quantum rug and completely missed what it tells us about the subjective nature of classical entropy. Quantum physics is another deterministic, reversible state machine, so I don’t see why it should differ in principle from a “classical world”.
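The classical version of the puzzle is the textbook entropy-of-mixing calculation. A sketch, assuming two ideal gases at the same temperature and pressure, each with $N$ molecules in a volume $V$, using the Sackur-Tetrode entropy ($\lambda$ is the thermal de Broglie wavelength, which depends on the molecular mass):

$$
S(N, V) = N k_B \left[ \ln\!\left(\frac{V}{N \lambda^3}\right) + \frac{5}{2} \right]
$$

If the description treats the gases as different species A and B, each one expands from $V$ into $2V$ when the partition is removed:

$$
\Delta S_{\text{distinct}} = \left[ S_A(N, 2V) + S_B(N, 2V) \right] - \left[ S_A(N, V) + S_B(N, V) \right] = 2 N k_B \ln 2
$$

If the description cannot tell them apart, the final state is a single gas of $2N$ molecules in $2V$:

$$
\Delta S_{\text{same}} = S(2N, 2V) - 2\,S(N, V) = 0
$$

The physical act of removing the partition is the same in both cases; what changes is whether the macroscopic description distinguishes A from B, which, as I read it, is the point Jaynes [1] develops.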

While it is true that a wavefunction, or something very much like it, must be what the universe uses to do its thing (that is, it is the territory), it isn’t necessarily true that our wavefunction (the one in our heads that we use to explain the measurements we can make) contains the same information. It could be some sort of projection, limited by what our devices can distinguish: a map of the territory that is, in principle, incomplete.

PS – not that I’m holding my breath that we’ll invent a device that can distinguish between “electron isotopes” or other particles (so far their properties have been extremely regular), but it’s important to understand what is possible in principle, so that your mind doesn’t break if we someday end up doing just that.

PPS – I really like the comment by Dmytry:

Well, in principle, it can happen that two particles would obey these statistics and yet be different in some subtle way, and the statistics would be broken if that subtle difference is allowed to interact with the environment, but not before. I think you can see how it can happen under MWI. The statistics are affected not by whether particles are ‘truly identical’ but by whether they would have interacted with you in an identical way so far (including interactions with the environment – you don’t have to actually measure this – hitting the wall works just fine).

Furthermore, two electrons are not identical because they are in different positions and/or have different spins (‘are in different states’). One has to define very carefully what ‘two electrons’ means. The language is made for discussing real-world items and has a lot of built-in assumptions that do not hold in QM.

edit: QFT is a good way to see it. A particle is a set of coupled excitations in fields. One particle can be a coupled excitation of fields A B C D …, and the other can be A B C D E, where E makes very little difference. E.g. protons and neutrons are very similar except for the charge. Under interactions that don’t distinguish E, the particles obey the statistics they would have if they were identical.
